
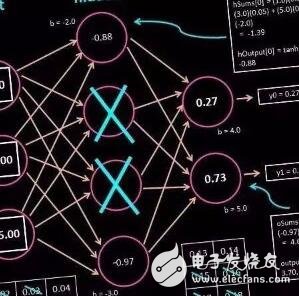

11 Common Pitfalls When Training Deep Neural Networks and How to Address Them

The author outlines 11 common challenges that may arise when building a neural network, such as data preprocessing, regularization, learning rate selection, activation function choice, and weight initialization. Each issue is accompanied by practical solutions and explanations, making this resource highly valuable for those working on deep learning projects.

**You Forgot to Normalize the Data**

**Problem Description**

When working with neural networks, it's crucial to think about how to properly normalize your data. This step is essential and cannot be overlooked—failing to do it correctly can make the network difficult or even impossible to train. Although normalization is a well-known concept in the deep learning community, it is rarely discussed in academic papers, which can lead to mistakes among beginners.

**How to Solve It?**

In general, normalization involves subtracting the mean of the data and dividing by its standard deviation. This is typically done separately for each input and output feature. However, depending on your needs, you may want to normalize a group of related features together using shared statistics, or compute the statistics over only a relevant part of the data.
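A minimal plain-Python sketch of per-feature standardization, assuming the data is a list of equal-length numeric rows. The helper names `fit_standardizer` and `standardize` are hypothetical, and the small `eps` guard is an added assumption to avoid dividing by a near-zero standard deviation. The statistics are fitted on the training rows only and would then be reused unchanged for validation and test data.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


# Hypothetical helpers (not from any library).
def fit_standardizer(rows: Matrix) -> Tuple[List[float], List[float]]:
    """Compute the per-feature mean and standard deviation of the training rows."""
    n = len(rows)
    n_features = len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(n_features)]
    stds = [
        math.sqrt(sum((row[j] - means[j]) ** 2 for row in rows) / n)
        for j in range(n_features)
    ]
    return means, stds


def standardize(rows: Matrix, means: List[float], stds: List[float],
                eps: float = 1e-8) -> Matrix:
    """Subtract the mean and divide by the standard deviation, feature by feature.

    `eps` is an assumed safeguard so a constant feature does not cause a
    division by zero.
    """
    return [
        [(x - m) / (s + eps) for x, m, s in zip(row, means, stds)]
        for row in rows
    ]


train = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
means, stds = fit_standardizer(train)
train_std = standardize(train, means, stds)
print(train_std)
```

After this transformation, each column of `train_std` has a mean of about 0 and a standard deviation of about 1, even though the two raw features differ in scale by a factor of 200.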

**Why?**

Most neural networks assume that both input and output data have a mean of approximately zero and a standard deviation of around one. These assumptions are deeply embedded in the design of many deep learning techniques, from weight initialization to optimization algorithms.

**Also Need to Pay Attention**

Untrained neural networks usually produce outputs roughly in the range of -1 to 1. If your target output falls outside this range (e.g., RGB images with values between 0 and 255), the network may become unstable during training. This can result in exploding gradients or wasted training time as the network tries to adjust its output to match the expected range. Rescaling the targets to roughly [-1, 1] (e.g., by dividing 8-bit pixel values by 128 and subtracting 1) helps avoid these issues.
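The rescaling from the paragraph above, sketched for 8-bit pixel values. Dividing by 128 and subtracting 1 maps 0 to -1.0 and 255 to 0.9921875, slightly inside the range; dividing by 127.5 instead would map 255 to exactly 1.0. The function names are hypothetical, and the inverse is included because network outputs have to be mapped back to pixel values before they can be visualized.

```python
from typing import List


# Hypothetical helpers for illustration.
def pixels_to_unit_range(pixels: List[int]) -> List[float]:
    """Map 8-bit values in [0, 255] to roughly [-1, 1]: divide by 128, subtract 1."""
    return [p / 128.0 - 1.0 for p in pixels]


def unit_range_to_pixels(values: List[float]) -> List[int]:
    """Invert the mapping for display, rounding and clamping to [0, 255]."""
    return [min(255, max(0, round((v + 1.0) * 128.0))) for v in values]


print(pixels_to_unit_range([0, 128, 255]))  # [-1.0, 0.0, 0.9921875]
```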

Feature scaling also plays an important role. If one feature has a much larger scale than the others, it can dominate the network’s learning process. Some libraries automatically normalize every feature by subtracting the mean and dividing by the standard deviation, but this isn't always appropriate. For example, if a feature varies over a very small range (e.g., 0.0 to 0.001), its standard deviation is tiny, and dividing by it can amplify noise; for a feature that is nearly constant, it can even lead to division by (almost) zero. Such a feature may be better left unscaled or scaled differently. In these cases, careful consideration is needed to avoid introducing instability or NaN values.

**You Forgot to Check the Results**

**Problem Description**

After training your network for a few epochs and seeing the loss decrease, you might think everything is working. Unfortunately, there's a high chance that something is still wrong in your code. Issues could be in data preprocessing, the training loop, or inference. A decreasing loss doesn’t necessarily mean your network is learning meaningful patterns.

**How to Solve It?**

It’s crucial to verify the correctness of your data at every stage. Visualizing results is often the best way to check. For image data, this is straightforward, but for other types of data, you’ll need to find creative ways to validate the output. Comparing the results to ground truth data ensures that each part of the pipeline is functioning as intended.

**Why?**

Unlike traditional programming, machine learning systems often fail silently. You won’t get error messages like in regular software, so it’s up to you to carefully inspect the results and detect any anomalies early on.

**Also Need to Pay Attention**

Check what the reported loss actually means. Visualize the network’s predictions on the training set and compare them to the actual labels. Even if the loss decreases from 100 to 1, that might still be an unacceptable result. Always test the model on a validation set to ensure it generalizes well to unseen data. Make it a habit to visualize everything from the start—not just when things go wrong.
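One simple way to judge whether a loss value is actually good is to compare it against a trivial baseline, such as always predicting the mean target. This is a sketch for a regression loss (mean squared error); the prediction values are made up for illustration, and `baseline_mse` is a hypothetical helper. A model that cannot clearly beat the baseline has not learned much, however far its loss has fallen.

```python
from typing import Sequence


def mse(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical helper: MSE of a model that always predicts the mean target.
def baseline_mse(targets: Sequence[float]) -> float:
    mean = sum(targets) / len(targets)
    return mse([mean] * len(targets), targets)


targets = [10.0, 12.0, 8.0, 11.0, 9.0]
model_predictions = [10.5, 11.0, 9.0, 10.0, 9.5]  # made-up numbers for illustration
print(f"model MSE:    {mse(model_predictions, targets):.3f}")  # 0.700
print(f"baseline MSE: {baseline_mse(targets):.3f}")            # 2.000
```

The same comparison is worth running on the validation set, where overfitting would show up as a model that beats the baseline on training data but not on unseen data.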

**You Forgot to Preprocess the Data**

**Problem Description**

Data can be tricky to work with. What seems similar in real-world terms might look completely different numerically. For instance, in character animation, the same action can appear very different depending on the position or direction. To address this, you need to represent the data in a way that captures similarity more effectively.

**How to Solve It?**

Consider what your features represent and whether a simple transformation can make similar inputs yield similar numerical values. Think about using a local coordinate system or a different representation (like a better color space) that aligns better with your task.
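A minimal 2D sketch of the local-coordinate idea, assuming each motion is a list of (x, y) points and that the character's root position and heading angle are known. `to_local_frame` is a hypothetical helper, not from any animation library. The same "step forward" performed at two different places and directions has very different world coordinates but identical local ones.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


# Hypothetical helper for illustration.
def to_local_frame(points: List[Point], root: Point, heading: float) -> List[Point]:
    """Express world-space 2D points relative to a root position and facing angle.

    Translate so the root is at the origin, then rotate by -heading so the
    character always faces along the +x axis.
    """
    cos_h, sin_h = math.cos(-heading), math.sin(-heading)
    local = []
    for x, y in points:
        dx, dy = x - root[0], y - root[1]
        local.append((dx * cos_h - dy * sin_h, dx * sin_h + dy * cos_h))
    return local


# The same short motion performed at two positions and directions.
step_a = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]  # at the origin, facing +x
step_b = [(5.0, 5.0), (5.0, 6.0), (5.0, 7.0)]  # at (5, 5), facing +y

local_a = to_local_frame(step_a, root=(0.0, 0.0), heading=0.0)
local_b = to_local_frame(step_b, root=(5.0, 5.0), heading=math.pi / 2)
print(local_a)
print(local_b)  # matches local_a up to floating-point rounding
```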

**Why?**

Neural networks assume that the input space is continuous, meaning that nearby points should represent similar concepts. Large discontinuities or groups of data that should be similar but aren’t can make learning harder.

**Also Need to Pay Attention**

Preprocessing can also help reduce redundancy. For example, if your network needs to learn the same action in multiple positions, it’s inefficient and can lead to overfitting. Proper preprocessing can simplify the learning process.

**You Forgot to Use Regularization**

**Problem Description**

Regularization, such as dropout, noise injection, or other sources of randomness in the training process, is essential for training neural networks. Even if you believe you have enough data or don’t expect overfitting, adding regularization is still recommended.

**How to Solve It?**

A basic approach is to add dropout before each linear layer (convolutional or dense). Start with a moderate setting, such as a keep probability of 0.75 or 0.9 (that is, dropping 25% or 10% of the units), and adjust based on how much the network overfits.
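A minimal plain-Python sketch of inverted dropout, parameterized by keep probability to match the numbers above. Note that many libraries take the opposite parameter: PyTorch's `torch.nn.Dropout(p)` and Keras's `Dropout(rate)` expect the fraction to *drop*, so a keep probability of 0.75 corresponds to `p=0.25`. The function below is a hypothetical illustration, not a library API.

```python
import random
from typing import List, Optional


# Hypothetical helper for illustration.
def dropout(activations: List[float], keep_prob: float, training: bool,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each unit with probability 1 - keep_prob during training.

    Surviving units are scaled by 1 / keep_prob so the expected activation is
    unchanged, which means no rescaling is needed at inference time.
    """
    if not training or keep_prob >= 1.0:
        return list(activations)
    rng = rng or random.Random()
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)
x = [1.0] * 10_000
y = dropout(x, keep_prob=0.75, training=True, rng=rng)
print(sum(y) / len(y))  # close to 1.0 on average
```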

**Why?**

Regularization helps prevent overfitting and improves generalization by introducing randomness into the training process. It can also speed up training and improve robustness to outliers.

**Also Need to Pay Attention**

Other forms of regularization, like data augmentation, can be equally effective. Dropout can also be viewed as a way to simulate a larger training set, helping the network generalize better.

**You Used Too Large a Batch Size**

**Problem Description**

Using too large a batch size can reduce the randomness of gradient updates, potentially harming model performance.

**How to Solve It?**

Start with the smallest batch size you can tolerate in terms of training time. Larger batches may require more training epochs to reach the same accuracy.

**Why?**

Smaller batches introduce more variation in the weight updates, helping the model escape local minima and converge to flatter, more generalizable solutions.
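To make the "more variation" point concrete, this sketch measures how much a mini-batch gradient estimate fluctuates around the full-batch gradient for several batch sizes. The per-example gradients are synthetic (drawn from a Gaussian, an assumption for illustration), and `minibatch_gradient_spread` is a hypothetical helper. The spread shrinks roughly as one over the square root of the batch size.

```python
import random
import statistics
from typing import List


# Hypothetical helper for illustration.
def minibatch_gradient_spread(per_example_grads: List[float], batch_size: int,
                              trials: int = 1000, seed: int = 0) -> float:
    """Standard deviation of the mini-batch mean gradient across random batches."""
    rng = random.Random(seed)
    estimates = [
        statistics.fmean(rng.sample(per_example_grads, batch_size))
        for _ in range(trials)
    ]
    return statistics.pstdev(estimates)


# Synthetic per-example gradients for a single weight: the full-batch gradient
# is their mean, and individual examples disagree noisily around it.
data_rng = random.Random(42)
grads = [data_rng.gauss(0.5, 2.0) for _ in range(1000)]

for bs in (1, 8, 64, 512):
    print(bs, round(minibatch_gradient_spread(grads, bs), 3))
```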

**Also Need to Pay Attention**

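Other factors can also change the effective batch size. For example, with a per-pixel loss on high-resolution images, each image contributes many terms to the gradient, so each update averages over far more values than the batch size alone suggests. Be mindful of how much the gradient is averaged in each iteration.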

**Learning Rate Is Incorrect**

**Problem Description**

An incorrect learning rate can severely impact training. Most frameworks use default settings that may not be optimal.

**How to Solve It?**

Turn off gradient clipping, find the highest learning rate that doesn’t cause instability, and set it slightly lower.
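The search described above can be sketched on a toy problem. Here a one-parameter quadratic loss, 0.5 · c · w², stands in for the real training loss (an assumption); for plain gradient descent on such a loss, training diverges once the learning rate exceeds 2 / c, so with c = 4 the boundary is 0.5. The helper names and the 0.8 back-off factor (one arbitrary reading of "slightly lower") are hypothetical.

```python
import math
from typing import Iterable, Optional


# Hypothetical helpers for illustration.
def is_stable(lr: float, curvature: float = 4.0, steps: int = 100) -> bool:
    """Run gradient descent on 0.5 * curvature * w**2 without any clipping and
    report whether the loss stays finite and ends lower than it started."""
    w = 1.0
    initial_loss = 0.5 * curvature * w * w
    for _ in range(steps):
        w -= lr * curvature * w  # the gradient of the loss is curvature * w
        if not math.isfinite(w):
            return False
    return 0.5 * curvature * w * w < initial_loss


def pick_learning_rate(candidates: Iterable[float], backoff: float = 0.8) -> Optional[float]:
    """Return the largest stable candidate, scaled down slightly by `backoff`."""
    stable = [lr for lr in candidates if is_stable(lr)]
    return max(stable) * backoff if stable else None


print(pick_learning_rate([1e-3, 1e-2, 1e-1, 0.3, 0.6, 1.0]))
```

In a real network the stability check would be "does the training loss blow up in the first few hundred steps", but the structure of the sweep is the same.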

**Why?**

Gradient clipping can mask issues with the learning rate, making it harder to find the optimal value. Beginners often set the learning rate too high, leading to unstable training.

**Also Need to Pay Attention**

If your data is clean and the learning rate is correct, you may not need gradient clipping. If instability persists, consider other issues in your data.

**The Wrong Activation Function Was Used on the Last Layer**

**Problem Description**

Using an inappropriate activation function on the final layer can limit the network’s output range. For example, ReLU on the last layer will only produce positive outputs.

**How to Solve It?**

For regression tasks, avoid using an activation function on the final layer unless you have a specific reason. If the output is genuinely bounded, use sigmoid for values in [0, 1] or tanh for values in [-1, 1].
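A small sketch of why the final activation must match the target range. For a negative regression target, it checks how close each candidate output activation can get over a grid of pre-activation values in [-10, 10]; the grid and the helper name are assumptions for illustration, but the output ranges noted in the comments are the true ranges of these functions.

```python
import math
from typing import Callable, Dict

# Candidate final-layer activations; comments give the output range each permits.
OUTPUT_ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "identity": lambda z: z,                          # any real value
    "sigmoid": lambda z: 1.0 / (1.0 + math.exp(-z)),  # (0, 1)
    "tanh": math.tanh,                                # (-1, 1)
    "relu": lambda z: max(0.0, z),                    # [0, inf): never negative
}


# Hypothetical helper for illustration.
def closest_achievable_error(activation: Callable[[float], float], target: float) -> float:
    """Smallest |activation(z) - target| over a grid of pre-activations z in [-10, 10]."""
    return min(abs(activation(k / 10.0) - target) for k in range(-100, 101))


target = -3.0  # e.g. a standardized regression target below zero (illustrative)
for name, act in OUTPUT_ACTIVATIONS.items():
    print(f"{name:8s} {closest_achievable_error(act, target):.3f}")
```

Only the identity output can reach the target; ReLU is stuck at an error of 3.0 and tanh at about 2.0, no matter how the earlier layers are trained.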

**Why?**

The final layer’s activation should reflect the nature of your output. If your data is unbounded, avoid activation functions that restrict the range.

**Also Need to Pay Attention**

Be cautious when using tanh or clipping on the final layer, as they can create vanishing gradients or make the network difficult to train.

**Bad Gradient in the Network**

**Problem Description**

ReLU units can become “dead neurons” that output zero and pass no gradient for every input, which can slow training or even stop it altogether.

**How to Solve It?**

If training stagnates, try switching to leaky ReLU or ELU, which allow some gradient flow for negative inputs.
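The difference is visible in the derivatives. This sketch computes the gradient of ReLU, leaky ReLU, and ELU for a hypothetical unit whose pre-activations are negative for every input in a batch. The negative slope of 0.01 and ELU's alpha of 1.0 are common choices (and PyTorch's defaults), not values prescribed by the text.

```python
import math


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


def leaky_relu_grad(x: float, slope: float = 0.01) -> float:
    return 1.0 if x > 0 else slope


def elu_grad(x: float, alpha: float = 1.0) -> float:
    # ELU(x) = x for x > 0, alpha * (exp(x) - 1) otherwise.
    return 1.0 if x > 0 else alpha * math.exp(x)


# A unit whose pre-activations are negative for every input in the batch.
pre_activations = [-2.0, -0.5, -1.3]
for name, grad in [("relu", relu_grad), ("leaky_relu", leaky_relu_grad), ("elu", elu_grad)]:
    # Total gradient signal that can flow back through this unit.
    print(name, sum(grad(x) for x in pre_activations))
```

With ReLU the total is exactly zero, so the weights feeding this unit never change; the other two keep a small, nonzero signal that lets the unit recover.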

**Why?**

ReLU’s gradient is zero for negative inputs, which can lead to dead neurons. This can prevent the network from learning effectively.

**Also Need to Pay Attention**

Operations with zero gradients (like max or round) can also cause problems. Be cautious when using them in your model.

**Network Weights Are Not Initialized Correctly**

**Problem Description**

Incorrect weight initialization can prevent the network from training effectively. Using arbitrary values or zeros is not advisable.

**How to Solve It?**

Use well-established methods like He, LeCun, or Xavier initialization. These are widely tested and perform well in most cases.
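For reference, the normal-distribution variants of these schemes set the weight standard deviation from the layer's fan-in and fan-out: He uses √(2 / fan_in), LeCun uses √(1 / fan_in), and Xavier (Glorot) uses √(2 / (fan_in + fan_out)). This plain-Python sketch applies them to a dense layer with zero biases; the helper names are hypothetical, and frameworks also offer uniform and truncated-normal variants with different constants.

```python
import math
import random
from typing import List, Tuple


# Hypothetical helpers for illustration.
def init_std(scheme: str, fan_in: int, fan_out: int) -> float:
    """Standard deviation of a normal initializer for a dense layer."""
    if scheme == "he":      # typically paired with ReLU-family activations
        return math.sqrt(2.0 / fan_in)
    if scheme == "lecun":   # typically paired with SELU
        return math.sqrt(1.0 / fan_in)
    if scheme == "xavier":  # Glorot normal, typically paired with tanh / sigmoid
        return math.sqrt(2.0 / (fan_in + fan_out))
    raise ValueError(f"unknown scheme: {scheme}")


def init_dense(fan_in: int, fan_out: int, scheme: str = "he",
               seed: int = 0) -> Tuple[List[List[float]], List[float]]:
    """Return (weights, biases): weights[out][in] ~ N(0, std^2), biases all zero."""
    rng = random.Random(seed)
    std = init_std(scheme, fan_in, fan_out)
    weights = [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]
    biases = [0.0] * fan_out
    return weights, biases


w, b = init_dense(fan_in=256, fan_out=128, scheme="he")
```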

**Why?**

Proper initialization ensures stable and efficient training. Custom initializations may lead to reproducibility issues or suboptimal performance.

**Also Need to Pay Attention**

Biases are typically initialized to zero, while some layer types have their own initialization conventions (for example, batch normalization usually starts with a scale of one and a shift of zero). Pay attention to these details.

**The Neural Network You Use Is Too Deep**

**Problem Description**

Deeper networks are not always better. While they can improve performance on complex tasks, they can also be harder to train and may fail entirely if not handled carefully.

**How to Solve It?**

Start with a shallow network (3–8 layers) and only increase depth after ensuring it works properly.
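A shallow starting point can be described as a short list of layer widths. This sketch (hypothetical helpers, plain Python rather than any framework's API) builds the widths for a fully connected network and counts its weights and biases, which shows how quickly parameters grow as layers are added.

```python
from typing import List


# Hypothetical helpers for illustration.
def mlp_layer_sizes(n_in: int, n_out: int, hidden: int, n_layers: int) -> List[int]:
    """Widths of an MLP with `n_layers` linear layers (n_layers - 1 hidden layers)."""
    if n_layers < 1:
        raise ValueError("need at least one linear layer")
    return [n_in] + [hidden] * (n_layers - 1) + [n_out]


def count_parameters(sizes: List[int]) -> int:
    """Weights plus biases for a stack of fully connected layers."""
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))


shallow = mlp_layer_sizes(n_in=10, n_out=1, hidden=256, n_layers=3)
deeper = mlp_layer_sizes(n_in=10, n_out=1, hidden=256, n_layers=8)
print(shallow, count_parameters(shallow))  # [10, 256, 256, 1] 68865
print(count_parameters(deeper))            # 397825
```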

**Why?**

Many improvements in deep learning apply better to smaller networks. If your network isn’t working, the issue is likely not the depth.

**Also Need to Pay Attention**

Starting with a small network allows faster experimentation and debugging. Depth should be added only after stability is achieved.

**The Number of Hidden Units Used Is Incorrect**

**Problem Description**

Too few hidden units may limit the network’s ability to learn, while too many can slow training and introduce noise.

**How to Solve It?**

Begin with 256–1024 hidden units and refer to similar applications for guidance. Adjust based on your specific needs.

**Why?**

The number of hidden units should be sufficient to capture the complexity of the task. More units may allow for better representations, especially when combined with dropout.

**Also Need to Pay Attention**

The number of hidden units is less critical than other factors like data quality and regularization. Experimentation is key once the network is working.